The lack of data anonymization standards forces customers to flip a coin when selecting a solution to protect their sensitive data.

Many new data anonymization technologies have been launched to serve data scientists and ML engineers, but without a standard measure of privacy and utility, customers can’t make objective choices about which technology to use. These products can’t be compared with one another, so customers are flying blind, relying solely on generalized statements about each product’s value.

Data anonymization protects the confidential data of individuals in a dataset and thus enables a safe innovation sandbox in which product creators can solve problems without the risk of a breach and the news headlines that follow. Original sensitive datasets are made “safe” from violating the privacy of any individual in the original dataset and can be used for discovery, visualization, analytics, and building ML applications and models in a development environment. What matters most to data science and ML customers is the privacy and utility of the anonymized data that is produced, but no standardized metric currently exists for either, making it impossible to intelligently compare competing anonymization products.

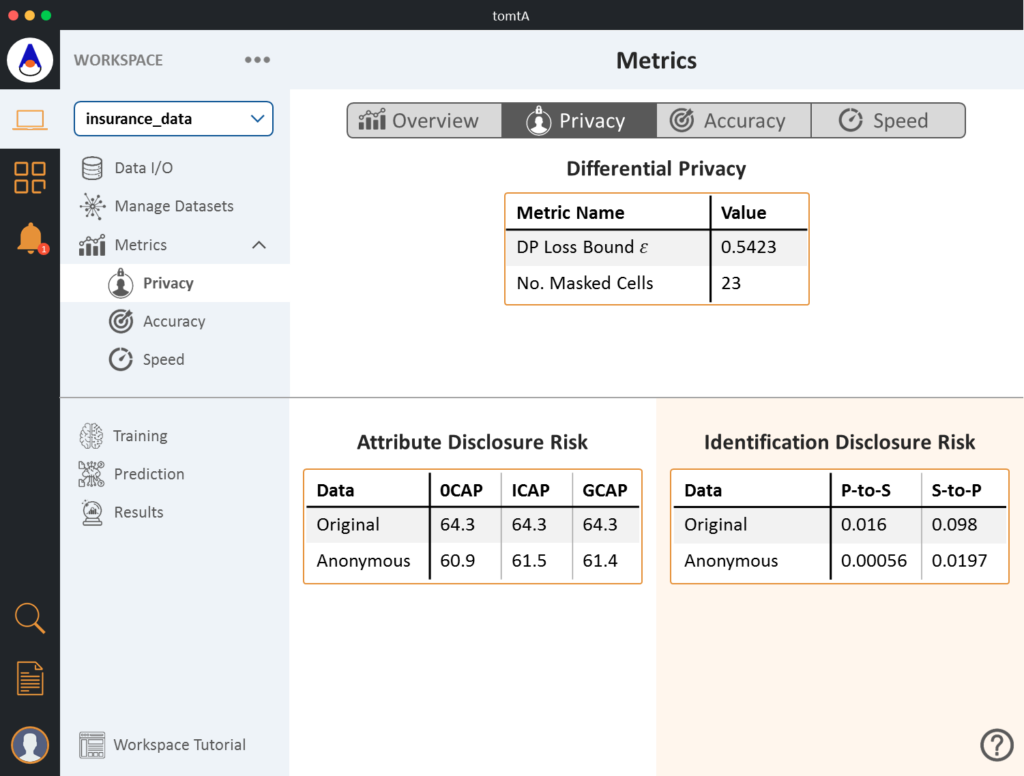

Consider the way classical differential-privacy-based anonymization products are being hawked today. Academic papers on differential privacy prove mathematical results that guarantee privacy under several assumptions and a privacy loss bound parameter ε. Purveyors of classical differential-privacy-based technologies expect their customers to understand this mathematics and trust it to protect the privacy of their confidential data. The value of ε is a measure of how well the data can or should be anonymized, not a measure of how well it has been anonymized. Nevertheless, some companies are peddling ε as a metric that measures the privacy of their anonymized data. It’s like asking someone to board an aircraft based on the veracity of Bernoulli’s principle.
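To make the role of ε concrete, here is a minimal sketch of the textbook Laplace mechanism applied to a counting query. It is an illustration only, not any vendor's implementation; the function names `laplace_noise` and `private_count` and the sample ages are hypothetical. The point to notice is that ε goes in as a parameter chosen before anything is released: it sets the noise scale, and nothing in the code measures the privacy of the output afterwards.

```python
import random


def laplace_noise(scale, rng):
    """Draw one sample from a zero-centered Laplace distribution.

    The difference of two independent exponentials with mean `scale`
    follows Laplace(0, scale).
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng):
    """Return a noisy count of the records matching `predicate`.

    Adding or removing one individual changes a count by at most 1
    (sensitivity 1), so the Laplace noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(0)  # seeded only to make the demo repeatable
    ages = [23, 35, 41, 58, 62, 67, 71]  # hypothetical toy data
    for eps in (0.1, 1.0, 10.0):
        # Smaller epsilon means a larger noise scale and a less accurate answer.
        print(eps, private_count(ages, lambda a: a >= 60, eps, rng))
```

Note also that the output is a single aggregate number, not a set of records.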

Classical differential-privacy-based technologies offer poor data utility because they produce aggregate statistics of the original data rather than individual micro-data.

1. Aggregate statistics require users to know in advance what they want to see so that they can query for it. However, a large part of data discovery is open-ended exploration and experimentation in a sandbox, where access to the full set of individual micro-data is often crucial to success.

2. Individual micro-data, not aggregate statistics, are used to train ML models.

Anonymization technologies that produce individual micro-data (the holy grail of data utility metrics) include synthetic data and atomic anonymization. But they are not at all equal. Atomic anonymization was created because synthetic data generation fails on so many levels when applied to anonymization.

Synthetic data generation, with or without differential privacy, is the wrong technology for anonymization. Generating synthetic data requires modeling the probability distribution of the points in a training set and then creating “fake” data by sampling from this distribution. This technique works well when there are a sizable number of homogeneous points in the training dataset, such as digital images and signals. However, the synthetic data technique fails for anonymization because:

1. Structured tabular data typically subject to anonymization is neither sizable nor homogeneous, and therefore utility measurements on the “fake” data prove to be bad.

2. Sampling more points to counter these bad utility measurements causes privacy metrics to plummet.

3. Patching up synthetic data generation with classical differential privacy (the so-called differentially private synthetic data technique) for the sake of better privacy adds far too much noise for the result to be useful.
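The model-then-sample recipe behind synthetic data can be sketched in a few lines. The example below is a deliberately simple assumption: it fits a two-column Gaussian to a tiny table and samples "fake" rows from it. Real generators use far richer models, and the function names and the (age, income) rows are hypothetical. With only five heterogeneous rows, the fitted distribution is a crude stand-in for the original data, which is the small-table situation described in the first point above.

```python
import math
import random


def fit_gaussian_2d(rows):
    """Estimate means, variances, and covariance of two numeric columns.

    Needs at least two rows and non-constant columns.
    """
    n = len(rows)
    mx = sum(x for x, _ in rows) / n
    my = sum(y for _, y in rows) / n
    sxx = sum((x - mx) ** 2 for x, _ in rows) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in rows) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in rows) / (n - 1)
    return mx, my, sxx, syy, sxy


def sample_gaussian_2d(params, k, rng):
    """Draw k synthetic rows from the fitted bivariate Gaussian."""
    mx, my, sxx, syy, sxy = params
    sx, sy = math.sqrt(sxx), math.sqrt(syy)
    # Clamp the correlation to guard against floating-point overshoot.
    rho = max(-1.0, min(1.0, sxy / (sx * sy)))
    rows = []
    for _ in range(k):
        z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
        x = mx + sx * z1
        # Mix z1 and z2 so the pair (x, y) has correlation rho.
        y = my + sy * (rho * z1 + math.sqrt(1 - rho * rho) * z2)
        rows.append((x, y))
    return rows


if __name__ == "__main__":
    # Hypothetical (age, income) rows standing in for a sensitive table.
    original = [(30, 40000), (45, 62000), (52, 58000), (38, 51000), (61, 75000)]
    model = fit_gaussian_2d(original)
    for row in sample_gaussian_2d(model, 3, random.Random(1)):
        print(row)
```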

Universal performance metrics to measure data privacy and utility are critical in our industry to educate customers so that they can choose the right solutions to achieve their objectives and remain compliant with data privacy regulations.

What constitutes good utility and privacy metrics, and what does it take to standardize them? Stay tuned.